Jailbreaking ChatGPT

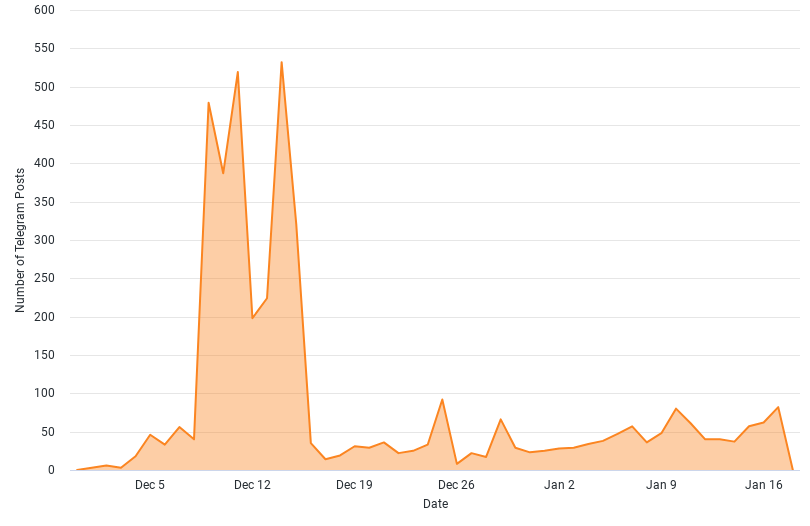

Since OpenAI released ChatGPT in November 2022, the world has been ravenously exploring its possibilities: the tool recorded 100 million monthly active users just two months after launch. Among those users are threat actors, who are actively jailbreaking ChatGPT to get around its built-in restrictions and testing its limits to generate malicious code and exploit payloads.

The sophistication of current and near-future artificial intelligence (AI) generated attacks, however, is low. The code we’ve observed tends to be very basic or bug-ridden: we’ve yet to observe any attacks leveraging advanced or previously unseen code generated by the AI tool. Despite this, the promise of AI models, specifically ChatGPT, still poses a major risk to organizations and individuals, and will challenge security and intelligence teams for years to come.

Let’s explore.

The illicit code and content that ChatGPT helps to create

Attempts to generate malicious code are occurring alongside previously reported illicit uses of ChatGPT, including:

- Excessively violent and sexual content

- Instructions on how to create illegal substances and chemical weapons

- Violent and graphic propaganda intended to spread misinformation

- Credible social engineering scripts

Flashpoint has also observed the following technical uses of ChatGPT, with threat actors using the AI tool to generate:

- Trojans and Trojan Binders

- SMS Bombers

- Ransomware code

- Malicious code capable of exploiting vulnerabilities

Although threat actors can use ChatGPT to generate malicious code and exploit payloads, Flashpoint assesses that, in its current state, the code produced by ChatGPT tends to be basic or bug-ridden; we have yet to observe any attacks leveraging advanced or previously unseen code generated by AI.

Organizations need to be aware that ChatGPT, like other AI models, has a fundamental limitation: these models all rely on existing data to generate a response. As a result, current technology prevents AI models from “creating” innovative code or novel techniques.

Still, despite these limitations, threat actors are leveraging ChatGPT in innovative ways, which poses a very real risk.

ChatGPT as a force multiplier for low-level attacks

Our analysts assess that ChatGPT will lower the barrier to entry for low-sophistication threat actors performing basic hacking tasks, such as scanning open ports, crafting phishing emails, and deploying virtual machines. This means that the current ills afflicting the security landscape (misconfigured databases and services, phishing, and the exploitation of vulnerabilities) will become even harder to defend against.

Misconfigured databases and services

Misconfigurations continue to be a common access point for threat actors and were responsible for over 16 billion stolen credentials and personal records in 2022. Adversaries use exposed personal records to inform phishing campaigns, and stolen credentials are leveraged in credential stuffing, brute-forcing, and other cyberattacks. Malicious actors already have quick access to tools that allow them to search the internet for easily accessible databases, and with the assistance of AI models, it is likely that this long-standing problem will worsen.
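On the defensive side, one way to get ahead of this is to check whether database ports on hosts you own are reachable from where they should not be. The following is a minimal sketch, not a Flashpoint tool: the names `COMMON_DB_PORTS`, `is_port_open`, and `audit_host` are hypothetical, the port list is simply the well-known defaults for a few data stores, and it should only be run against systems you are authorized to test.

```python
import socket

# Well-known default ports for a few common data stores.
# The selection is illustrative, not exhaustive.
COMMON_DB_PORTS = {
    "MySQL": 3306,
    "PostgreSQL": 5432,
    "Redis": 6379,
    "Elasticsearch": 9200,
    "MongoDB": 27017,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def audit_host(host: str, ports=None, timeout: float = 1.0) -> list:
    """Return the names of services on `host` that accept TCP connections."""
    ports = COMMON_DB_PORTS if ports is None else ports
    return [
        name for name, port in ports.items()
        if is_port_open(host, port, timeout)
    ]
```

Calling `audit_host("127.0.0.1")` lists which of these default ports accept connections on the local machine. An open port is not proof of a misconfiguration; it only flags a service whose authentication and network exposure deserve a closer look.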

Social engineering

Flashpoint has observed multiple threat actors using ChatGPT and other AI models to generate credible phishing emails. In our State of Cyber Threat Intelligence 2023 report, we showed how phishing continues to be a primary attack vector for ransomware and other cyberattacks.

ChatGPT’s ease of use, as well as the recent GPT-4 update, will allow non-English-speaking threat actors to create a plethora of well-written messages. This lets them focus on sending emails to as many targets as possible, rather than spending hours writing them.

Exploiting vulnerabilities

While the fundamental limitation of AI means it is unlikely to produce never-before-seen code, security teams will be even more hard-pressed to defend against easy-to-exploit vulnerabilities.

Last year, Flashpoint collected over 26,900 vulnerabilities, and over 55 percent of them were remotely exploitable. ChatGPT is already capable of writing proofs of concept, and our analysts observed threat actors using ChatGPT to write malicious code in Python, using the Metasploit framework, to exploit a known vulnerability in Android and gain remote access.

As such, despite ChatGPT’s current level of sophistication, scenarios like these suggest that IT security teams will need to be proficient in prioritizing vulnerabilities, or else be inundated with noise. Additionally, AI-assisted exploitation could widen the impact of vulnerabilities that affect a wide range of products and are easy to exploit, such as Log4Shell.
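To make the idea of prioritization concrete, here is a minimal sketch of one possible triage approach. It is an illustration under stated assumptions, not Flashpoint's methodology: the `Vuln` fields, the `priority_score` function, and the two bonus weights are all hypothetical, and the identifiers in the usage example are placeholders rather than real vulnerabilities.

```python
from dataclasses import dataclass

# Hypothetical weights: how much remote exploitability and a public
# exploit raise the priority on top of the base severity score.
REMOTE_BONUS = 3.0
PUBLIC_EXPLOIT_BONUS = 3.0


@dataclass
class Vuln:
    vuln_id: str
    remotely_exploitable: bool
    public_exploit: bool
    affects_our_assets: bool
    severity: float  # base severity on a 0-10 scale


def priority_score(v: Vuln) -> float:
    """Combine severity with exploitability signals into one score."""
    score = v.severity
    if v.remotely_exploitable:
        score += REMOTE_BONUS
    if v.public_exploit:
        score += PUBLIC_EXPLOIT_BONUS
    return score


def prioritize(vulns):
    """Drop vulnerabilities in products we do not run, then rank the rest."""
    relevant = [v for v in vulns if v.affects_our_assets]
    return sorted(relevant, key=priority_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Vuln("VULN-A", False, False, True, 9.0),
        Vuln("VULN-B", True, True, True, 7.5),
        Vuln("VULN-C", True, True, False, 10.0),
    ]
    for v in prioritize(backlog):
        print(v.vuln_id, priority_score(v))
```

In this example the remotely exploitable issue with a public exploit outranks the one with the higher base severity, and the issue in a product the organization does not run is filtered out entirely. That filtering step is what cuts down the noise.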

Protect your organization with Flashpoint

Flashpoint assesses that it is highly likely that the use of AI in cybercrime will continue to grow as the technology advances and becomes more readily available to users around the world. Our analysts will continue to monitor changes in the AI landscape and how threat actors are adapting to new and emerging technologies. Learn more, or sign up for a free trial to see how Flashpoint is helping security teams achieve their mission daily.